Experiments with AI and Indian classical music

"Colorless green ideas sleep furiously" - Chomsky. Some thoughts and personal opinions from experiments using AI tools for music.

Disclaimer: This one might be a tad bit complex since it involves some math and philosophy.

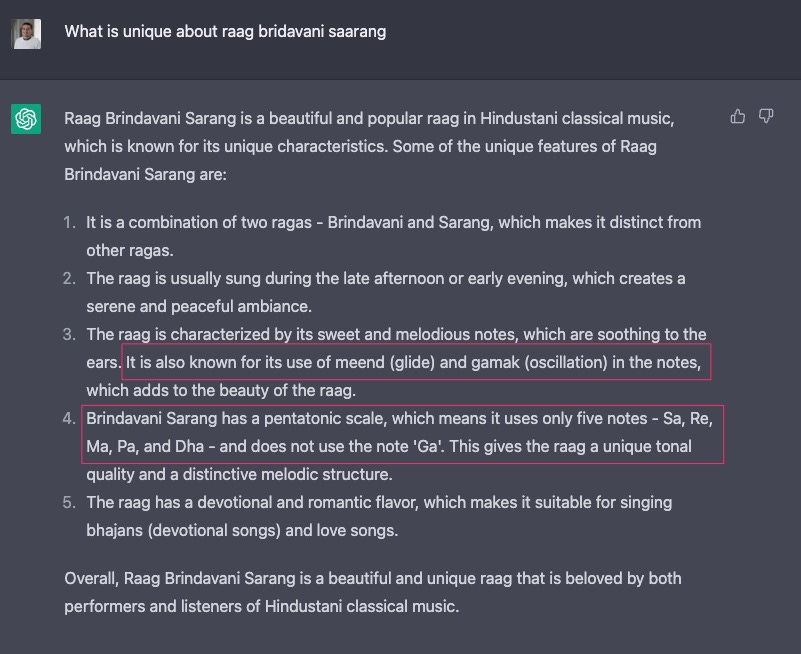

You should dispel any intrigue you have about the quality of the generated response. It’s wrong. I won’t explain why or how this particular answer is incorrect. Instead, I’ll focus on why “generative text” as a way to craft answers is a non-trivially hard problem for topics such as Indian Classical music.

English & its limitations: Models like GPT are trained using text data written by humans. Training data is usually one or a combination of user-generated content from forums, blogs, news corpus sources, Twedditbook 😬 , public domain books, transcribed audio-video (for LLMs), and other available open & proprietary data sets.

There’s very little written about Indian classical music offline or online, so there’s not a lot to go on in terms of a training set. Some seminal books exist, but they are not in English. While sophisticated scanning and translation systems might appear in the future, so much of the music theory is intertwined with the native language that I fear no accurate translation is possible.

Digression: This isn’t a new problem. For the academically curious, Searle’s Chinese room argument describes this predicament when dealing with abstractions like “intelligent responses” in a foreign language. “To imagine a language is to imagine a form of life.” - Wittgenstein

Oral traditions: Much of Indian classical music has been passed down through generations orally. There are songs we learn today whose theory was codified (loosely) 400 years back, similar to Bach or Mozart from the Western tradition. India is unique for having two separate classical music systems.

The image I use for this publication is an 18th-century miniature painting of Miya Tansen learning from Swami Haridas. There’s so much knowledge documented here about the people, climate, flora & fauna, and even the clothes the subjects wore. What’s not recorded here is what was being taught or how.

I sometimes think about the people who attempted to codify music as a consistent theory. They tried categorizing musical templates into familiar, more palatable, and approachable topics. It’s a “classical” system because of that effort, but standardizing can also be limiting. Watch this excellent video about how Pythagoras broke music if you’re curious.

There are other socio-cultural issues. Indian music theory developed in the last 500 years while the country was seeing dramatic shifts in culture due to the invading Mughals and, later, the colonialists. There’s propaganda and myth embedded in popular culture and, by extension, plenty of unconscious bias built into traditional systems of knowledge, which cannot be viewed through the lens of presentism. Unfortunately, this problem extends to all forms of knowledge, not just music.

Aesthetics: Here’s a thought exercise: write down what you felt after listening to your favorite band or singer the last time you saw them live. Even if you are a great writer, how much of that feeling can you capture?

Understanding music theory feels similar. The theory is simple, but comprehending the message requires patiently listening to several performances and forming “deep neural relationships” in your brain between the Raag (melodic template) and the feelings it evokes.

The limitation of language as a means of expressing human thought isn’t a new problem either. Analytic philosophers like Russell & Chomsky have tried to explain the history of thought via languages. They realized serious limits exist to what could be intelligibly said or written (or generated).

The future

Despite my opinions about the current state of generative AI for classical music, I’m genuinely excited about the advancements in this space. We need a new language to talk about the universality of music & how it relates to human emotions. Machines might beat humans to it, judging by prior work with language translation. Some of the impressive things I’ve seen recently include:

A company building the ability for machines to understand human expressions & emotions, starting with the commonly discernible ones.

Our smartphones & smartwatches today can sense our ambient environment (e.g. what music we’re listening to, at which location, etc.) and also measure our heart rate, gaze, activity, and more. The intersection of these technologies is an exciting space to watch.

Comments and discussions are open for premium supporters! Thank you for your support so I can keep churning out articles like this. I promise this post was not generated 😄